Text generation Web UI 2.6

Text Generation Web UI - Your Personal AI Playground

Text Generation Web UI is a locally hosted, customizable interface designed for working with large language models (LLMs). If you’ve ever wished you could run your own ChatGPT-style setup without worrying about sending your data to the cloud—this is it.

Built with Gradio (a Python library for quickly building web-based user interfaces for machine learning models), it provides an easy-to-use chat and text generation environment in your browser, giving you full control over prompts, model selection, and output without relying on cloud services. Whether you’re testing AI models, building bots, or exploring natural language generation, it offers flexibility and privacy, making it ideal for developers, researchers, and hobbyists who want to experiment with LLMs locally.

The interface supports multiple backends, including Hugging Face Transformers, llama.cpp, ExLlamaV2, and even NVIDIA’s TensorRT-LLM through Docker. You can load models, fine-tune with LoRA, switch between chat modes, and access OpenAI-compatible APIs—all from one place. Built-in extension support adds even more functionality, from multimodal features to streaming capabilities. Everything runs in your browser, offering a clean, responsive way to interact with the models you’re working on.

What sets this tool apart is the level of control it gives users over their text generation environment. You’re not locked into one model or backend, and you don’t need internet access to run powerful AI models. It’s fully customizable, supports API usage, and makes it simple to test, tweak, and deploy models for writing, research, coding, or content creation—all while keeping your data local and private.

What Can You Actually Do With It?

Run LLMs locally—no internet or OpenAI API needed

Swap between models without restarting

Fine-tune models with LoRA support

Use OpenAI-compatible APIs for chat or completion

Load up multiple extensions for extra features like streaming or multi-modal tasks

Save chat histories and replay past conversations

Tweak advanced generation settings for output that fits your project

Text Generation Web UI is built for testing custom models, building chatbots, writing stories, and automating content. If that's what you're looking for, this tool fits right in.
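To make the OpenAI-compatible API point concrete, here is a minimal Python sketch that sends one chat message to the local server and reads back the reply, using only the standard library. It assumes the web UI was started with its `--api` option and that the API is listening on `http://127.0.0.1:5000/v1`, the usual default in recent versions; check your version's documentation if yours differs. The names `build_chat_request`, `extract_reply`, and `chat` are hypothetical helpers written for this example, not part of the project.

```python
import json
import urllib.request

# Assumption: the API was enabled with --api and listens on port 5000 (its usual default).
API_BASE = "http://127.0.0.1:5000/v1"


def build_chat_request(user_message, base_url=API_BASE, max_tokens=200):
    """Build an OpenAI-style chat completion request (nothing is sent yet)."""
    payload = {
        "messages": [{"role": "user", "content": user_message}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def extract_reply(response_json):
    """Pull the assistant's text out of an OpenAI-style response body."""
    return response_json["choices"][0]["message"]["content"]


def chat(user_message, timeout=120):
    """Send one message to the local server and return the model's reply."""
    request = build_chat_request(user_message)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return extract_reply(json.load(response))
```

With a model loaded, `print(chat("Write a haiku about running models locally."))` should print the model's answer. Because the request format follows OpenAI's, existing OpenAI client code can usually be pointed at the same local base URL instead.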

How to Install & Run It

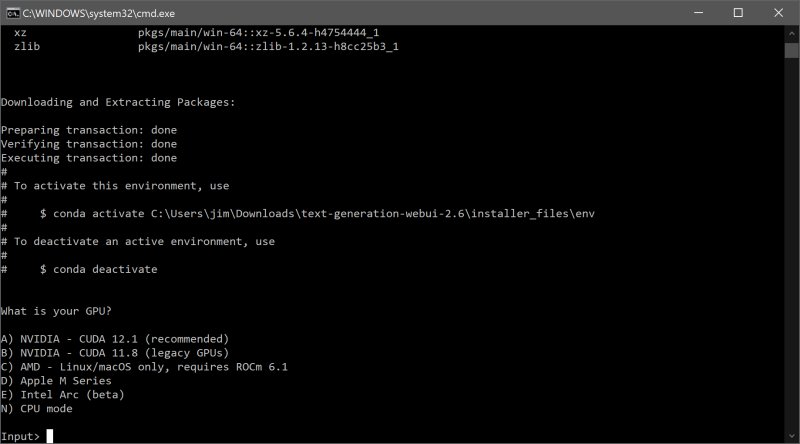

OK, this involves some Python and command-line stuff at first, and some people may not be comfortable with that, but it's not hard. First, make sure you have enough disk space. The download is only 28 MB, but after the installer fetched everything it needed, this install was closer to 2 GB by the time it was done. Download the zip file from above and unzip it into the folder where you want it to live. Open the folder, choose the correct file for your operating system, and double-click it.

Windows: start_windows.bat

macOS: start_macos.sh

Linux: start_linux.sh
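If you'd rather check things from a script than by eye, the Python sketch below covers both steps above: it confirms there is roughly 2 GB of free disk space (the figure from this particular install; models need more on top) and picks the launcher for your operating system. The names `launcher_for` and `has_enough_space` are hypothetical; only the script names come from the list above.

```python
import platform
import shutil

# Launcher script for each OS, as listed above.
LAUNCHERS = {
    "Windows": "start_windows.bat",
    "Darwin": "start_macos.sh",  # platform.system() reports macOS as "Darwin"
    "Linux": "start_linux.sh",
}

# Assumption: about 2 GB, based on this one install; downloaded models need extra space.
REQUIRED_GB = 2


def launcher_for(system=None):
    """Return the start script for the given (or current) operating system."""
    system = system or platform.system()
    try:
        return LAUNCHERS[system]
    except KeyError:
        raise ValueError(f"No launcher listed for {system!r}") from None


def has_enough_space(path=".", required_gb=REQUIRED_GB):
    """True if the drive holding `path` has at least `required_gb` GB free."""
    free_bytes = shutil.disk_usage(path).free
    return free_bytes >= required_gb * 10**9
```

On macOS and Linux, `.sh` files may not run on a double-click; running the chosen script from a terminal inside the unzipped folder (for example `bash start_linux.sh`) works as well.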

Select your GPU vendor. If you don't know what GPU (graphics card) you have, choose N, which stands for "None" and runs models on your CPU instead. The program will do its thing; it takes a while, but you only have to do it once. Go grab a coffee. When it's done, open your browser and type http://localhost:7860 in the address bar.

TADA! You’re ready to generate text, chat, or run models.
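The first launch can take a while, so if you want a script to wait until the interface is ready before opening it, a small Python check like this one does the job. It assumes the default address from the step above, `http://localhost:7860`; `web_ui_is_up` and `wait_for_web_ui` are hypothetical helper names.

```python
import http.client
import time
import urllib.request

DEFAULT_URL = "http://localhost:7860"  # default address from the step above


def web_ui_is_up(url=DEFAULT_URL, timeout=3.0):
    """Return True if something answers at the URL with a non-error status."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return 200 <= response.status < 400
    except (OSError, http.client.HTTPException):
        # Connection refused, timeouts, and HTTP errors (URLError/HTTPError are OSErrors).
        return False


def wait_for_web_ui(url=DEFAULT_URL, total_seconds=300, poll_seconds=5):
    """Poll the URL until it responds or the time budget runs out."""
    deadline = time.monotonic() + total_seconds
    while time.monotonic() < deadline:
        if web_ui_is_up(url):
            return True
        time.sleep(poll_seconds)
    return False
```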

OK, but how do you run a model?

Text Generation Web UI is essentially a web interface for whatever AI model you want to run, so once it’s installed, you need to go get a model. Thankfully, downloading a model for Text Generation Web UI is pretty straightforward. You can grab models from Hugging Face or similar sources manually, or use the built-in download script. If you’re using the script, just run

python download-model.py organization/model-name

and the program handles the rest. Once downloaded, the model needs to go inside the text-generation-webui/models folder where you unzipped the program earlier. Some models come as a single .gguf file; just drop that file straight into the models folder. Others, like full Transformers models, include multiple files and need their own subfolder. In that case, create a new folder inside models, give it a name that makes sense, and place all the model’s files there. After that, fire up the web UI, and the model should show up in the model list, ready to load and run.
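The folder rules above boil down to this: loose `.gguf` files and per-model subfolders both count as models. The Python sketch below lists what a models folder contains under that rule, which is a handy sanity check when a model doesn't show up. It's an approximation written for this article, not the web UI's own detection logic, and `list_models` (along with its default path) is a hypothetical name.

```python
from pathlib import Path


def list_models(models_dir="text-generation-webui/models"):
    """List loose .gguf files and model subfolders, per the rules above."""
    found = []
    for entry in Path(models_dir).iterdir():
        if entry.is_file() and entry.suffix.lower() == ".gguf":
            found.append(entry.name)  # single-file model dropped in directly
        elif entry.is_dir():
            found.append(entry.name)  # multi-file model in its own subfolder
    return sorted(found)
```

Anything else sitting loose in the folder (a stray README or a lone `config.json`) is ignored, which mirrors the advice to give multi-file models their own subfolder.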

If you’re looking for model recommendations, you could start with Mistral 7B (https://huggingface.co/mistralai/Mistral-7B-v0.1) if you have plenty of disk space and computing power, or TinyLlama (https://huggingface.co/TinyLlama/TinyLlama-1.1B-Chat-v1.0) if your desktop has less horsepower.
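To put "a lot of space and power" into numbers, a common back-of-the-envelope rule is that model weights take about (parameters × bits per parameter ÷ 8) bytes. The Python sketch below applies it to the two suggestions above, using approximate parameter counts of 7 billion and 1.1 billion. It counts the weights only; real memory use is higher once the context cache and runtime overhead are added, and quantized GGUF files vary somewhat from the flat 4-bit figure used here.

```python
def estimate_weights_gb(params_billion, bits_per_param=16):
    """Approximate size of a model's weights in GB (10**9 bytes).

    params_billion * 1e9 parameters * (bits / 8) bytes each / 1e9 bytes per GB
    simplifies to params_billion * bits / 8.
    """
    return params_billion * bits_per_param / 8


# Approximate parameter counts for the two models suggested above.
SUGGESTED = {"Mistral 7B": 7.0, "TinyLlama 1.1B": 1.1}

for name, params in SUGGESTED.items():
    full = estimate_weights_gb(params, 16)  # unquantized 16-bit weights
    quant = estimate_weights_gb(params, 4)  # roughly a 4-bit quantization
    print(f"{name}: ~{full:.1f} GB at 16-bit, ~{quant:.1f} GB at 4-bit")
```

By this estimate, Mistral 7B needs roughly 14 GB for its 16-bit weights versus about 2.2 GB for TinyLlama, which is why the smaller model is the safer pick on modest hardware.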

Pros and Cons

Pros:

Fully local, private AI sandbox

Supports multiple backends and extensions

OpenAI API compatibility for easy integrations

Swap models on the fly

Great for testing, dev work, or just playing with AI

Cons:

Can be resource-heavy depending on the model

Manual setup has a learning curve

Some extensions require extra installs

Geek Verdict

Text Generation Web UI is the kind of tool every AI enthusiast will love to mess around with. It gives you the power to run large language models locally, customize everything, and never worry about sending data out to third parties. Perfect for anyone building tools, writing, testing models, or just curious about what LLMs can do when there’s no cloud involved. Set it up once, and it’ll become your AI learning lab—right on your machine. If you are into these sorts of things and have some disk space and power, it's definitely worth the download. Plus, the author's name is awesome.
