The Oobabooga Text Generation Web UI has reached version 2.8. It provides a locally hosted, customizable environment for running large language models (LLMs). The web interface lets users run ChatGPT-style models without sending data to the cloud, which keeps conversations private and under the user's control. Built with the Gradio library, it offers a user-friendly chat and text generation platform suited to developers, researchers, and hobbyists interested in AI experimentation.

The interface supports multiple backends, including Hugging Face Transformers, llama.cpp, ExLlamaV2, and NVIDIA’s TensorRT-LLM. From the browser, users can load models, fine-tune them with LoRA, switch chat modes, and access OpenAI-compatible APIs. Built-in extensions add further functionality, including multimodal and streaming features.
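To illustrate what an OpenAI-compatible API makes possible, here is a minimal Python sketch that sends one chat message to a locally running instance. It rests on assumptions not stated in this article: that the server was started with its API enabled, that it listens on `http://127.0.0.1:5000`, and that it exposes the standard `/v1/chat/completions` route. The helper names `build_chat_request` and `chat` are hypothetical and exist only for this example.

```python
import json
import urllib.request

# Assumption: the local server exposes an OpenAI-style endpoint at this address.
# Adjust host and port to match your own setup.
API_URL = "http://127.0.0.1:5000/v1/chat/completions"


def build_chat_request(prompt, url=API_URL, max_tokens=200):
    """Build (but do not send) a POST request in the OpenAI chat format."""
    payload = {
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat(prompt, url=API_URL, timeout=120):
    """Send the prompt to the local server and return the reply text."""
    request = build_chat_request(prompt, url)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    # OpenAI-style responses put the reply under choices[0].message.content.
    return body["choices"][0]["message"]["content"]
```

With a model loaded and the server running, calling `chat("Explain LoRA in one sentence.")` should return the model's reply as a string. Because the request and response follow the OpenAI format, existing OpenAI client code can often be pointed at the local address instead.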

Key features of Oobabooga include the ability to run LLMs locally without an internet connection, swap models easily, fine-tune with LoRA, and save chat histories. It is designed for various applications, including testing custom models, building chatbots, and automating content creation.

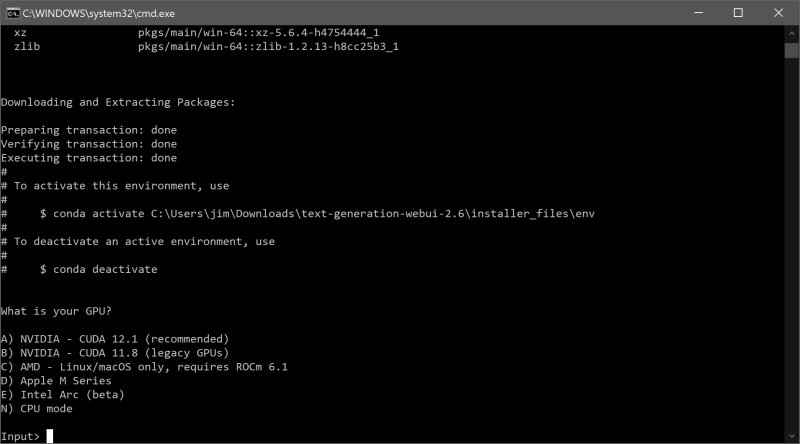

Installation involves downloading a zip file (approximately 28 MB; the installed program may need up to 2 GB of disk space) and running the appropriate script for your operating system. Models can be obtained from Hugging Face or other sources, and instructions are provided for placing them in the correct directory.

While Oobabooga provides numerous advantages, such as maintaining user privacy and allowing model swapping, it can be resource-intensive and requires some technical knowledge for setup. Nevertheless, it caters to AI enthusiasts who wish to explore LLMs without reliance on cloud services, making it an invaluable tool for anyone interested in artificial intelligence.

Overall, Oobabooga Text Generation Web UI works as an AI playground where users can experiment with language models directly on their own machines. For those willing to work through the setup process, it offers a rich environment for learning and exploration.

As AI technology continues to evolve, tools like Oobabooga will likely become increasingly valuable for researchers and developers. The ability to run complex models locally may foster a new wave of innovation in AI applications, potentially leading to advances in fields such as natural language processing, creative writing, and automated customer service. Furthermore, as concerns about data privacy grow, solutions that prioritize local processing and user control will become more appealing to both individuals and organizations.

