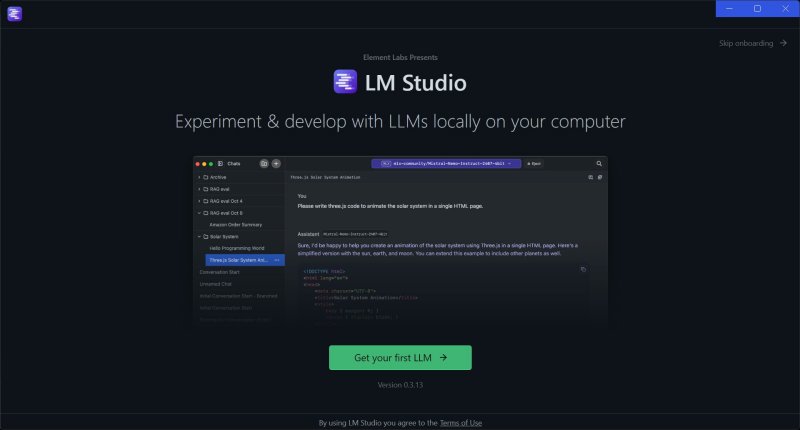

LM Studio 0.3.13

LM Studio: Run Large Language Models on Your PC—No Cloud, No Problem

LM Studio is the tool for folks who want to run powerful AI models like LLaMA, Mistral, and others directly on their own hardware, offline. If you've ever wanted a ChatGPT-style assistant without sending your data into the cloud, this is the app you've been waiting for.

Why Would You Want LM Studio?

Privacy and control are huge these days. With LM Studio, nothing you type gets sent off to random servers or third-party APIs. Your conversations, prompts, and any testing you do stay local. If you're a developer, researcher, or just a curious user playing around with AI models, this means complete control over your data. No subscriptions, no per-token fees, and no waiting for someone else's servers to spin up.

This setup is also perfect for experimenting with custom AI models in creative projects, software development, or building tools like chatbots and code assistants, right from your machine. It's an ideal option for writers, coders, and anyone who wants to tinker with AI without needing a Ph.D. in machine learning or getting locked into OpenAI's or anyone else's ecosystem.

Hardware Requirements

LM Studio is powerful, but it's not magic—you'll need decent hardware to get the most out of it. Here's what to expect:

CPU Only: You can run models with just your CPU, but performance will vary. Small models (like 7B parameters) are usable but not blazing fast.

GPU Support: For better speed, you'll want a modern GPU with at least 8GB of VRAM. NVIDIA cards are the most widely supported, but AMD cards and Apple Silicon Macs work too. The more VRAM, the bigger the model you can load.

Memory: Plan for at least 16GB of RAM for smooth operation, especially with larger models.

Storage: SSD recommended—those model files can hit 10-20GB or more, depending on what you download.

If you're packing a gaming rig or a workstation, you're golden. Laptops with beefy GPUs work too, but don't expect miracles on an old business laptop.
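To see why VRAM decides which models you can load, a rough rule of thumb helps: a model's weights take about (number of parameters) × (bits per weight ÷ 8) bytes. The Python sketch below applies that rule to the 7B-parameter model and 8GB card mentioned above. It is not part of LM Studio; the function names are hypothetical, and the 1.2× overhead factor is an assumption standing in for the context cache and runtime buffers. Real usage varies with context length, the quantization format, and the inference engine, so treat the results as ballpark figures only.

```python
# Back-of-the-envelope memory estimate for running a local model.
# Assumption: memory ~= weights (params x bits/8) x a fudge factor for
# context cache and runtime buffers. Hypothetical helpers, not an LM Studio API.


def estimate_model_gb(params_billion: float, bits_per_weight: float,
                      overhead: float = 1.2) -> float:
    """Approximate memory in GB (1 GB = 1e9 bytes) needed to load a model."""
    # One billion parameters at 1 byte each is about 1 GB,
    # so GB of weights = billions of params x bytes per weight.
    weights_gb = params_billion * bits_per_weight / 8
    # Assumed headroom for the context (KV) cache and runtime buffers.
    return weights_gb * overhead


def fits_in_vram(params_billion: float, bits_per_weight: float,
                 vram_gb: float, overhead: float = 1.2) -> bool:
    """True if the rough estimate fits entirely in GPU memory."""
    return estimate_model_gb(params_billion, bits_per_weight, overhead) <= vram_gb


if __name__ == "__main__":
    # A 7B model at full 16-bit precision vs. common quantized sizes,
    # checked against an 8 GB card.
    for bits in (16, 8, 4):
        need = estimate_model_gb(7, bits)
        print(f"7B @ {bits}-bit: ~{need:.1f} GB, "
              f"fits in 8 GB VRAM: {fits_in_vram(7, bits, 8)}")
```

Under these assumptions, a 7B model at full 16-bit precision needs roughly 17GB, while a 4-bit quantized version needs roughly 4GB. That gap is why quantized downloads are the usual pick for an 8GB card.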

What Can You Do With It?

The short answer? Nearly anything. LM Studio acts as your AI sandbox where you can:

Run local chatbots that don't phone home

Test and compare models for your projects

Analyze documents or datasets offline

Build coding assistants without needing Copilot or exposing your source code.

Experiment with roleplay bots, NPCs, or writing partners

Create AI tools tailored to your specific job or workflow without sharing them with the rest of the world.

Because it runs locally, LM Studio is a great fit for fields where data privacy is non-negotiable, like law, healthcare, and finance, and for paranoid power users who don't trust sending sensitive info across the web.

What's an AI Model?

AI models are trained differently based on the type of data they're fed and the tasks they're meant to handle. Some are built for general conversations, others for coding, summarizing long documents, creative writing, or even roleplaying as characters. Something like ChatGPT is trained on a huge mix of everything, which makes it a jack of all trades, but a master of none.

There's also size to consider. The full DeepSeek model, for example, is something like 150GB. That's huge, and it would take a ton of storage and processing power to run.

But if you only need something narrow, like a text-to-speech model, it might be only a gig or so and far easier for your PC to manage.

Choosing a specialized model often gives you better results because it's been fine-tuned for that job. It's like picking the right tool for a task: you wouldn't use a sponge to hammer a nail, right?

Where Do You Get Models for LM Studio?

LM Studio has a built-in model downloader that pulls directly from Hugging Face, the most popular repository for open-source AI models. It's reputable, updated constantly, and loaded with models for coding, writing, chatting, roleplaying, you name it. You can read each model's description and see which versions are likely to fit your hardware before downloading. You don't have to go digging through shady websites or GitHub pages hoping something works. Just search and download right inside the app. Once a model is downloaded, LM Studio handles the rest, with no CLI commands or Python environments needed: just click, load, and chat.

This combo of local control and easy model access is why LM Studio stands out. You're not locked into one AI model or platform. You're free to test, switch, or run multiple models depending on what you're working on that day.

NOTE: Hugging Face is packed with free models, but not every model is free for every use. Always skim the license before you build anything serious. LM Studio doesn't force you to check, so it's up to you to play it smart.

Pros and Cons

Pros:

No internet needed once your models are downloaded, so your data never leaves your machine

Supports a wide range of models, including Mistral, LLaMA, Phi, and more

User-friendly GUI for loading models and chatting instantly

Runs on Windows, Mac, and Linux

Perfect for offline development, testing, and creative projects

Cons:

High system requirements for large models

GPU needed for best performance

Model downloads can eat up SSD space fast

Casual users might face a learning curve understanding model sizes and quantization

Geek Verdict

LM Studio is the dream setup for anyone curious about AI who doesn't want Big Tech looking over their shoulder or imposing nonsensical guardrails. If you've got the hardware, LM Studio turns your PC into a private AI lab. No logins, no API keys, no third-party servers. Just raw AI power at your fingertips, on your terms.

For devs, tinkerers, and privacy geeks, LM Studio is one of the smartest tools you'll download this year. It's not the lightest app around, but what you get in return is total freedom to experiment and build with this generation's revolutionary tech. Grab it if you're ready to take AI into your own hands.
