KoboldCPP 1.86.2

KoboldCPP: An Easy Way To Run a Private AI Chatbot on Your PC

Looking for a way to use AI without relying on the cloud? KoboldCPP is a great choice for running powerful AI models on your computer with no accounts, internet, subscriptions, or privacy trade-offs.

If you want to work on private business projects, write stories, build game lore, or experiment with AI in a more secure and personal way, KoboldCPP lets you do it all offline using local large language models (LLMs). The best part is that KoboldCPP makes it easier than you would think. With a small learning curve and a few clicks, you should be up and running.

What Is KoboldCPP?

KoboldCPP is a super lightweight, open-source tool that runs GGUF-format AI language models on your local system. It acts as a backend for the KoboldAI web interface, giving you a friendly chat window that feels like using ChatGPT—but it's all happening on your computer.

You download a model file, launch KoboldCPP, and start chatting. It supports CPU and GPU acceleration, making it flexible for different hardware setups.

What Is GGUF and What Models Work With KoboldCPP?

What the GGUF are we talking about? GGUF is AI lingo, commonly expanded as GPT-Generated Unified Format. This newer format is designed specifically for local use. It's fast, lightweight, and works well with quantized models (like 4-bit or 8-bit), which are easier to run on mid-range PCs. Most of the popular open-source models on Hugging Face (a model repository) are available in GGUF.

KoboldCPP does not support GPTQ, Safetensors, or OpenAI's proprietary models. If the model isn't GGUF and LLaMA-style, it won't run in KoboldCPP. So before downloading a model, double-check that it's in .gguf format and designed for text or image generation.
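A file extension can be wrong, so here is a minimal sketch for checking the file itself. It relies on the GGUF layout, where a file begins with the four bytes "GGUF" followed by a 32-bit version number, and it assumes the common little-endian variant. The function name read_gguf_version is just an illustration and is not part of KoboldCPP.

```python
import struct

GGUF_MAGIC = b"GGUF"  # the first four bytes of a GGUF file


def read_gguf_version(path):
    """Return the GGUF format version, or None if the file is not GGUF."""
    with open(path, "rb") as f:
        header = f.read(8)
    if len(header) < 8 or header[:4] != GGUF_MAGIC:
        return None
    # Assumption: little-endian file, so the version is an unsigned 32-bit LE int.
    (version,) = struct.unpack("<I", header[4:8])
    return version
```

If this returns None for a download, the file is probably a different format (or an incomplete download) and is not worth loading.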

Who Is KoboldCPP For?

KoboldCPP is great for anyone curious about AI but not interested in paying for cloud services or handing their data over to them. (Why is it that if someone stole our data, we would be pissed, but if we pay people to steal our data, we are cool with it? Discuss below.)

It's ideal for writers looking to co-write stories or generate dialogue, RPG creators who want AI-powered NPCs, developers tinkering with local AI setups, or just curious users who want a ChatGPT-like experience that runs privately and offline. It appeals to people in low-connectivity environments or anyone tired of rate limits and monthly fees. If you want control over how AI runs and what it knows, this tool gives it to you.

Main Features of KoboldCPP

Run LLMs Locally: Works with GGUF/LLaMA-based models on your PC

Offline Mode: Fully private, no internet or cloud server required

Fast Performance: Supports Intel oneAPI and NVIDIA CUDA for GPU acceleration

Simple Setup: No complex installs or coding needed—just unzip and launch

KoboldAI Web UI Compatible: Use popular features like memory, world info, and character cards

Customizable: Load LoRA adapters, tweak context size, and personalize your chat

Where to Get AI Models for KoboldCPP

KoboldCPP doesn't include AI models, so you'll need to download one separately. Think of it like downloading a brain for your assistant. Hugging Face is probably the top spot for models, but plenty of places are popping up every day. Just make sure you trust where you are getting them. When choosing a model, stick with 4-bit or 8-bit GGUF versions if you're using a mid-range PC.
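To see why 4-bit and 8-bit versions matter, here is a rough back-of-the-envelope sketch. It assumes the weights take about (parameters x bits per weight / 8) bytes. Real GGUF files come out somewhat larger because of quantization metadata, and the chat context needs memory on top of that, so treat the result as a lower bound. The helper name is hypothetical.

```python
def approx_weight_gb(params_billions, bits_per_weight):
    """Rough lower bound on model weight size in GB (1 GB = 1e9 bytes)."""
    total_bytes = params_billions * 1e9 * bits_per_weight / 8
    return total_bytes / 1e9


# Compare two common model sizes at 4-bit and 8-bit quantization.
for size in (7, 13):
    for bits in (4, 8):
        print(f"{size}B model at {bits}-bit: ~{approx_weight_gb(size, bits):.1f} GB")
```

By this estimate a 7B model needs roughly 3.5 GB at 4-bit and 7 GB at 8-bit, which is why the 4-bit version is the safer first pick on a machine with 8 GB of RAM.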

How to Use a Model with KoboldCPP

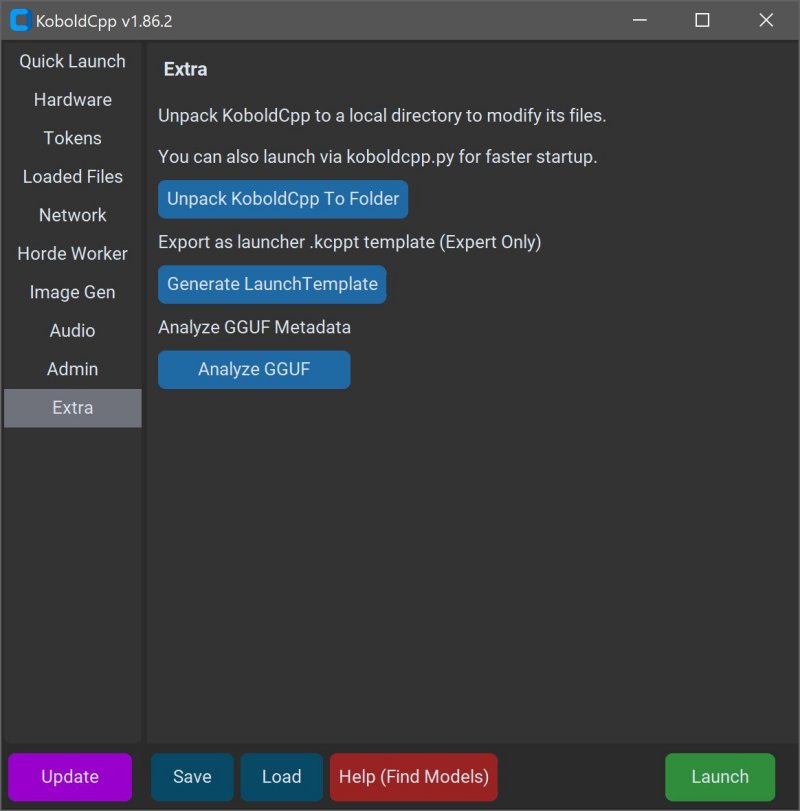

Once you've downloaded a model, using KoboldCPP is simple:

1- Launch KoboldCPP.exe

2- Select your .gguf model file

3- Choose a backend (CPU, CUDA for NVIDIA, or oneAPI for Intel GPUs)

4- Adjust settings (context size, memory use, etc.)

5- Click Start—a local web chat will open in your browser

6- Start chatting like you would with any AI chatbot

You can also enable features like story memory, worldbuilding, and character cards inside the KoboldAI interface. Everything stays offline.
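Because the chat runs on a local web server, a script on the same PC can talk to it as well. The sketch below assumes KoboldCPP is already running, listening on its usual default port 5001, and serving the KoboldAI-style /api/v1/generate endpoint. The port, the sampling values, and the helper names are assumptions for illustration, so adjust them to match your setup.

```python
import json
import urllib.request

# Assumption: default local address; change the port if you set a different one.
API_URL = "http://localhost:5001/api/v1/generate"


def build_payload(prompt, max_length=80, temperature=0.7):
    """Build the JSON body for a text generation request."""
    return {"prompt": prompt, "max_length": max_length, "temperature": temperature}


def extract_text(response_body):
    """Pull the generated text out of a KoboldAI-style JSON response."""
    data = json.loads(response_body)
    return data["results"][0]["text"]


def generate(prompt, url=API_URL):
    """Send the prompt to the local server and return the generated text."""
    request = urllib.request.Request(
        url,
        data=json.dumps(build_payload(prompt)).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request, timeout=120) as response:
        return extract_text(response.read())
```

With the server up, calling generate("Once upon a time") should return a continuation of the prompt. The request only goes to localhost, so nothing leaves your machine.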

System Requirements

You don't need high-end gear, but here's what works well:

OS: Windows or Linux

RAM: 8GB minimum, 16GB+ recommended

CPU: Modern Intel or AMD with AVX2 support

GPU (optional): NVIDIA or Intel GPU for faster performance

If you have a modern PC, you should be good. We ran text generation models on a 16 GB machine with an i7-6700 and a GTX 750 Ti just to see if we could, and it went pretty well. But the old box really struggled with image generation, so judge from there.
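If you aren't sure whether your CPU has AVX2, Linux lists the supported instruction sets on the "flags" line of /proc/cpuinfo. The sketch below parses that text. It only applies to Linux on x86 processors, and the function name is hypothetical. On Windows, the CPU's spec sheet or a system information tool will tell you the same thing.

```python
def has_avx2(cpuinfo_text):
    """Return True if the first 'flags' line of /proc/cpuinfo text lists avx2."""
    for line in cpuinfo_text.splitlines():
        key, _, value = line.partition(":")
        if key.strip() == "flags":
            return "avx2" in value.split()
    return False  # no flags line found (e.g. not an x86 Linux system)


def this_machine_has_avx2():
    """Read /proc/cpuinfo on Linux; returns False if the file is unavailable."""
    try:
        with open("/proc/cpuinfo", "r") as f:
            return has_avx2(f.read())
    except OSError:
        return False
```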

Things to Keep in Mind

The first time you download and run a model, it might feel slightly technical. New things can be scary sometimes. But once it's set up, it's smooth and fast. Bigger models need more RAM, so start with smaller 4-bit versions and upgrade later if you need to and your system supports it. Also, LLMs can be very large, so make sure you have enough space on the old hard drive before you get going.
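A quick way to check disk space before a big download is Python's standard shutil.disk_usage. This is only a small sketch. The helper name and the example folder are placeholders, and the model size is whatever the download page lists.

```python
import shutil


def has_room_for(model_size_gb, folder="."):
    """Return True if the drive holding `folder` has at least model_size_gb free."""
    free_gb = shutil.disk_usage(folder).free / 1e9  # bytes to GB (1 GB = 1e9 bytes)
    return free_gb >= model_size_gb
```

For example, has_room_for(8, "D:/models") checks whether a hypothetical D:/models folder sits on a drive with at least 8 GB free.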

When you download KoboldCPP, you'll notice multiple versions tailored to different hardware configurations. Here's a breakdown to help you choose the right one:

Standard Version: Suitable for most users. It supports NVIDIA GPUs and modern CPUs.

No CUDA Version: Smaller build that excludes CUDA support. Ideal if you don't have an NVIDIA GPU and prefer a more compact executable.

Old CPU Version: Designed for systems with older CPUs lacking advanced instruction sets. If the standard version doesn't run on your machine, this is the alternative to try.

CUDA 12 Version: Optimized for newer NVIDIA GPUs compatible with CUDA 12. This version is larger but offers slightly better performance on supported hardware.

For Linux users, appropriate binary files are available corresponding to the Windows versions mentioned above. The Mac version is specifically built for modern Mac systems with M1, M2, or M3 chips. Each version is designed to leverage specific hardware capabilities, ensuring optimal performance on a wide range of systems.
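The version breakdown above can be written out as a small decision helper. This is only a sketch of the article's advice for the Windows and Linux builds. The labels are the version names used here, not actual download file names, and the function and its parameters are hypothetical.

```python
def choose_build(cpu_is_modern, has_nvidia_gpu, supports_cuda12=False):
    """Suggest a KoboldCPP build label based on the hardware described."""
    if not cpu_is_modern:
        # Older CPUs lacking advanced instruction sets need the fallback build.
        return "Old CPU"
    if not has_nvidia_gpu:
        # Without an NVIDIA GPU, CUDA support only adds download size.
        return "No CUDA"
    if supports_cuda12:
        # Larger build, slightly faster on newer NVIDIA GPUs.
        return "CUDA 12"
    return "Standard"


print(choose_build(cpu_is_modern=True, has_nvidia_gpu=True))  # prints "Standard"
```

The order of the checks is a judgment call: the CPU check comes first because a build that won't start at all is a bigger problem than one that is just larger than it needs to be.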

Geek Verdict

KoboldCPP gives you full control over your AI experience: no internet required, no data leaks, and no monthly fees. It really is one of the easiest ways to start if you've ever wanted your own private ChatGPT-style assistant. #Jarvis. It's fast, flexible, and backed by a strong open-source community.

For writers, gamers, or anyone tired of relying on the cloud, KoboldCPP is one of the best ways to bring AI right to your desktop. If you want help picking your first model or customizing your setup, just ask. We're happy to guide you through it.
